AEDA ( An Easier Data Augmentation Technique for Text Classification )

A new data augmentation method proposed for text classification.

- Paper resources

- Objective

- Why do we need augmentations?

- What are the different kind of augmentations used in NLP?

- What is the novel idea presented in the paper?

- Can you share an example of how this augmentation would work?

- How many punctuation marks are inserted?

- Why one-third of the sentence length?

- At which positions should we insert these punctuation marks?

- What are the different punctuation marks used?

- Why does AEDA work better compared to EDA?

- Implementation

- Dataset

- Load libraries

- Define paths and constants

- Load dataset

- Class Distribution

- Splitter

- Tokenize the training dataset

- Using fast.ai Pipeline to construct Dataset

- AEDA data augmentation as fast.ai transform

- Construct dataloaders

- Using the classic TextCNN model introduced by Yoon Kim, paper

- Define learner

- References

- This paper proposes a new data augmentation technique for text classification task.

- It also compares the performance of this augmentation technique with EDA: Easy Data Augmentation Techniques for Boosting Performance on Text Classification Tasks and concludes that their method is simpler and produces better results.

- In this experiment we will try to implement this data augmentation using

fastaion a text classification task.

- To have better generalizability, we need more and more comprehensive datasets but collection of these datasets and labelling is a laborious task so augmentation becomes an attractive method to introduce more examples for model to consume.

What are the different kind of augmentations used in NLP?

- For improving machine translation task, researchers have tried substituting common words with rare words thus providing more context for rare words.

- Some researchers have tried replacing words with their synonyms for tweet classification.

- Randomly swap two words in a sentence.

- Randomly delete a word in the sentence and many more.

- Between 1 to

n/3wherenrepresents the length of the sentence.

The authors mention that they want to increase the complexity of the sentence but doesn't want to add too many punctuation marks which would interfere with the semantic meaning of the sentence.

- The authors inserted them at random positions in the sentence.

. ; ? : ! ,

Why does AEDA work better compared to EDA?

- EDA proposes synonym replacement, random replacement, random insertion and random deletion. These modifications could change the semantic meaning of the text.

- Whereas AEDA just introduces punctuation marks which would only introduce noise and would not mess the semantic meaning or the word ordering.

Dataset

- We will be using this dataset used in this challenge where the goal is to predict the subreddit of a subreddit post based on their title and their description. This is an example of

text categorization/text classificationtask

import pandas as pd

import numpy as np

from pathlib import Path

from tqdm import tqdm

from fastai.text.all import *

SEED = 41

BASE_DIR = Path('~/data/dl_nlp')

RAW_DATA_PATH = BASE_DIR / 'data'

OUTPUT_DIR = Path('~/data/dl_nlp/outputs')

PUNCTUATIONS = ['.', ',', '!', '?', ';', ':']

PUNC_RATIO = 0.3

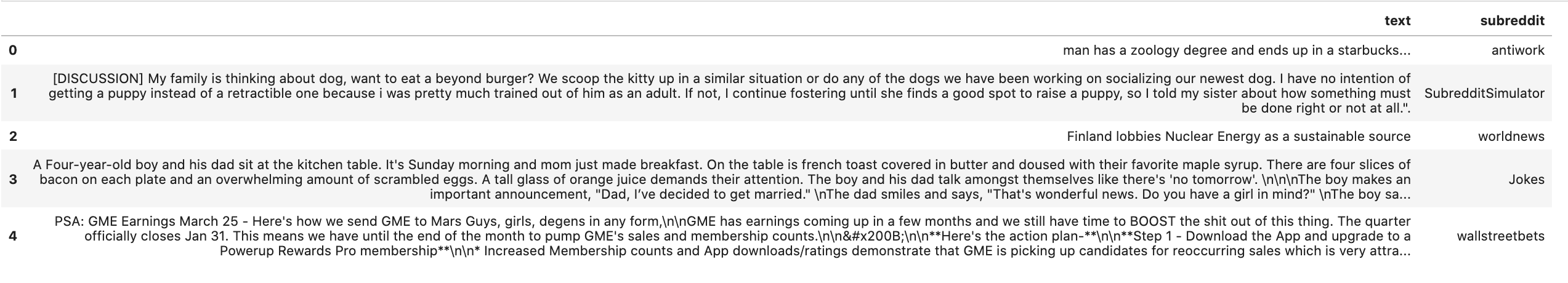

train = pd.read_csv(RAW_DATA_PATH / 'train.csv')

train.head()

- Text column represents

titleas well asdescription. - Subreddit column represents our

label.

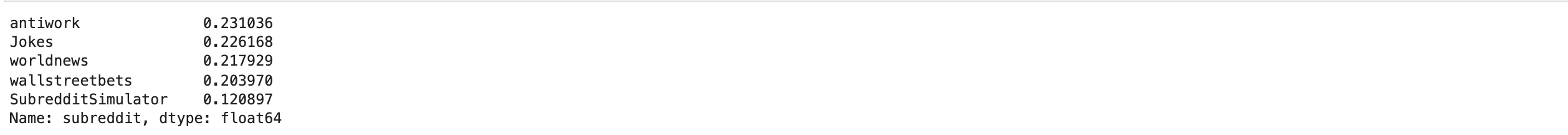

train.subreddit.value_counts(normalize=True)

- We have multiple categories that our model needs to get right.

- Most of the categories have similar percentage of data points in the dataset, with only

SubredditSimulatorcategory having less training examples.

splits = RandomSplitter(seed=41)(train)

- Create a splitting strategy.

- Here we plan to split our training dataframe randomly into training ( 80% ) and validation ( 20% ) datasets.

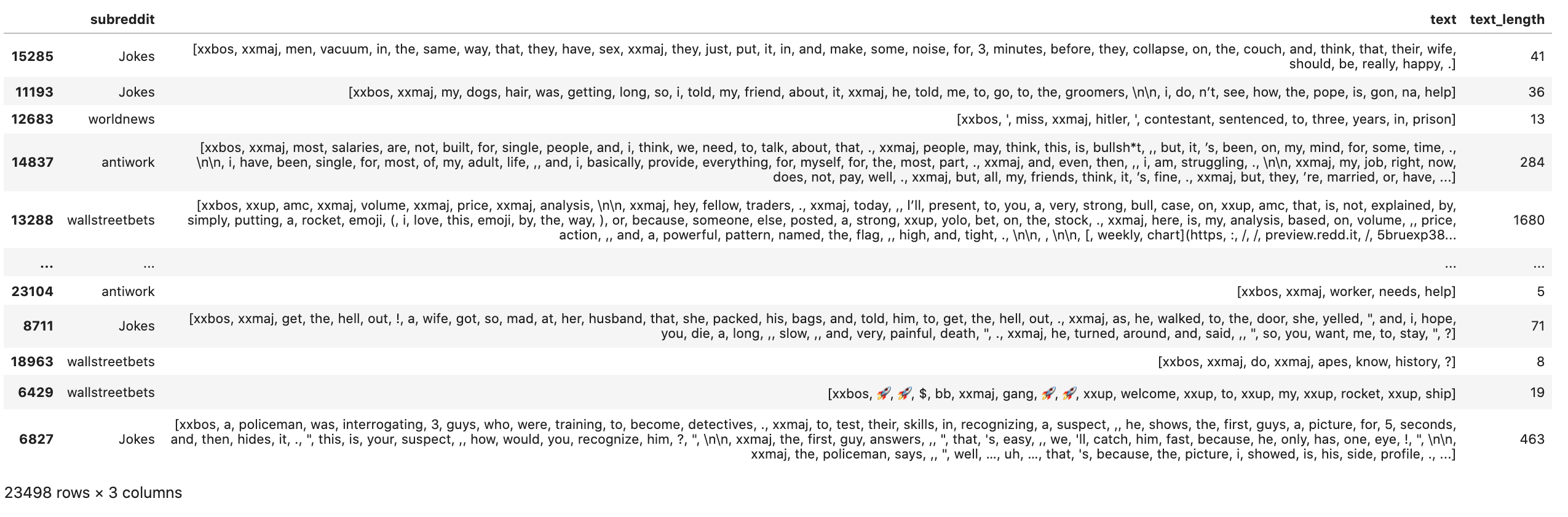

df_tok, cnt = tokenize_df(train.iloc[splits[0]], text_cols='text')

- Fast.ai provides a method to tokenize our dataset.

- Here we only passing our training examples as the corpus for tokenizer to create vocabulary.

- We could pass in different types of tokenizers here but by default it works with

WordTokenizer.

df_tok

- Here we could see that it has split our

textstring into tokens and created an additional column calledtext_lengthdescribing the length. - It has also added some library specific tokens like

xxbos,xxmajetc.xxbosrepresents beginning of the sentence token. For more details please refer to fast.ai

cnt

- Here is a snapshot of the vocabulary constructed by the

tokenize_dfmethod.

text_pipe = Pipeline([attrgetter('text'), Tokenizer.from_df(0), Numericalize(vocab=list(cnt.keys()))])

lbl_pipe = Pipeline([attrgetter('subreddit'), Categorize()])

lbl_pipe.setup(train.subreddit)

dsets = Datasets(train, [text_pipe, lbl_pipe], splits=splits, dl_type=SortedDL)

- Here we use

Pipelineprovided byfast.aito put together different transforms we want to run on our dataframe. -

text_piperepresents the Pipeline that we would like to run on ourtextcolumn in the dataframe. -

lbl_piperepresents the Pipeline that we would like to run on oursubredditcolumn in the dataframe. -

Numericalizetransform takes in our vocabulary and converts the tokens to ids. -

Categorizetransforms converts our labels to categories. -

Tokenizer.from_dftransform tokenizes the text stored in our dataframe.

np.random.seed(0)

PUNCTUATIONS = ['.', ',', '!', '?', ';', ':']

PUNC_RATIO = 0.3

class InsertPunctuation(Transform):

split_idx = 0

def __init__(self, o2i, punc_ratio=PUNC_RATIO):

self.o2i = o2i

self.punc_ratio = punc_ratio

def encodes(self, words:TensorText):

new_line = []

q = random.randint(1, int(self.punc_ratio * len(words) + 1))

qs = random.sample(range(0, len(words)), q)

for j, word in enumerate(words):

if j in qs:

new_line.append(self.o2i[PUNCTUATIONS[random.randint(0, len(PUNCTUATIONS)-1)]])

new_line.append(int(word))

else:

new_line.append(int(word))

return TensorText(new_line)

- We have taken the implementation from the github shared by the authors and created a

fast.aitranform that would take in thePUNC_RATIOando2ias parameters and inserts punctuations at random positions in the sentence. -

PUNC_RATIOby default takes a value of0.3which represents the1/3rdof the sentence length mentioned in the paper. -

o2iis mapping between token to token_id.

seq_len = 72

dls_kwargs = {

'after_item' : InsertPunctuation(dsets.o2i),

'before_batch': Pad_Chunk(seq_len=seq_len)

}

dls = dsets.dataloaders(bs=32, seq_len=seq_len, **dls_kwargs)

- When creating

fast.aidataloders we could perform operations on some of the events emitted. - Here we have made use of two such events,

after_itemcallback is used to run our augmentation and add punctuation marks. -

before_batchcallback is used to make sure that we have paddded the tokens to make sure they are of same size before we collate them to form a batch.

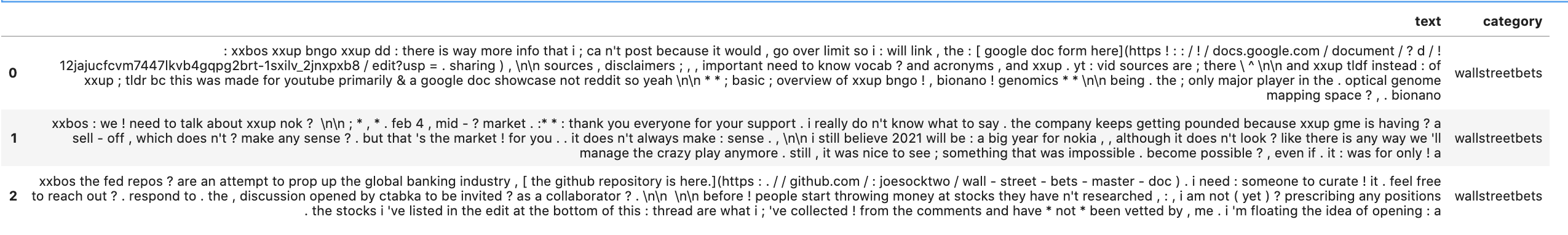

dls.show_batch(max_n=3)

-

dls.show_batchgives a glimpse of the batch

Using the classic TextCNN model introduced by Yoon Kim, paper

class TextCNN(Module):

def __init__(self, n_embed, embed_dim, num_filters, filter_sizes, num_classes, dropout=0.5, pad_idx=1):

store_attr('n_embed,embed_dim')

self.embed = nn.Embedding(num_embeddings=n_embed,

embedding_dim=embed_dim,

padding_idx=1

)

self.convs = nn.ModuleList([

nn.Conv2d(in_channels=1,

out_channels=num_filters,

kernel_size=(k, embed_dim)

)

for k in filter_sizes

])

self.dropout = nn.Dropout(dropout)

self.relu = nn.ReLU()

self.fc = nn.Linear(num_filters * len(filter_sizes), num_classes)

def _conv_and_pool(self, x, conv):

x = self.relu(conv(x)).squeeze(3)

x = F.max_pool1d(x, x.size(2)).squeeze(2)

return x

def forward(self, x):

out = self.embed(x)

out = out.unsqueeze(1)

out = torch.cat([self._conv_and_pool(out, conv) for conv in self.convs], 1)

out = self.dropout(out)

out = self.fc(out)

return out

vocab = dls.train_ds.vocab

num_classes = get_c(dls)

model = TextCNN(len(vocab[0]),

embed_dim=300,

num_filters=100,

filter_sizes=[1, 2, 3],

num_classes=num_classes,

)

learn = Learner(dls, model, metrics=[accuracy, F1Score(average='weighted')])

- Using

F1 score weightedmetric for multi-class classification.

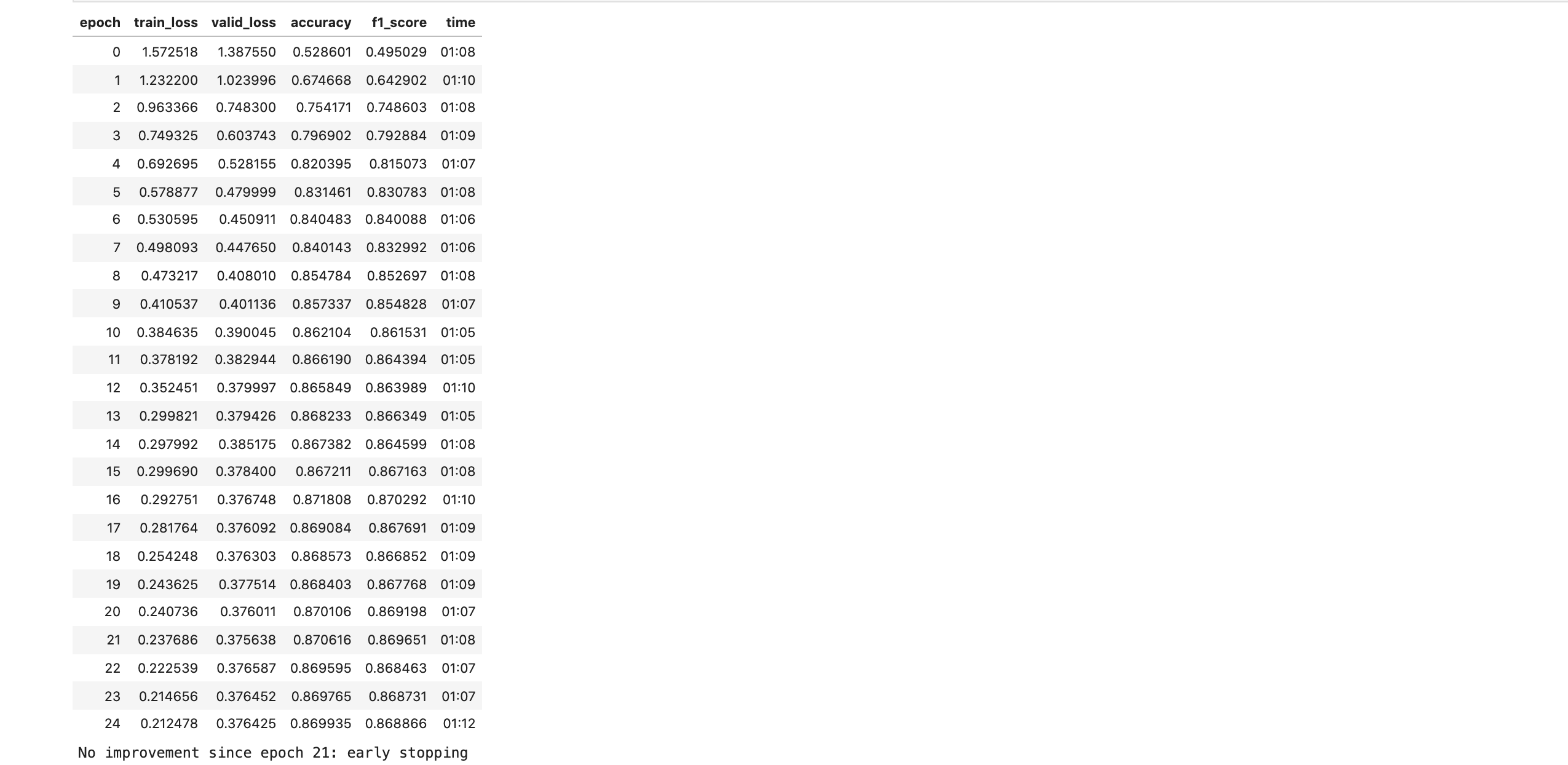

learn.fit_one_cycle(n_epoch=25, lr_max=3e-4, cbs=EarlyStoppingCallback(patience=3))

- We are getting a

F1( weighted )score of0.869without using anypre-trainedembeddings.